Staying in Shape: Learning Invariant Shape Representations using Contrastive Learning

Published in Conference on Uncertainty in Artificial Intelligence (UAI), 2021

Recommended citation: Gu, Jeffrey and Yeung, Serena. (2021). "Staying in Shape: Learning Invariant Shape Representations using Contrastive Learning." UAI 2021. https://arxiv.org/pdf/2107.03552.pdf

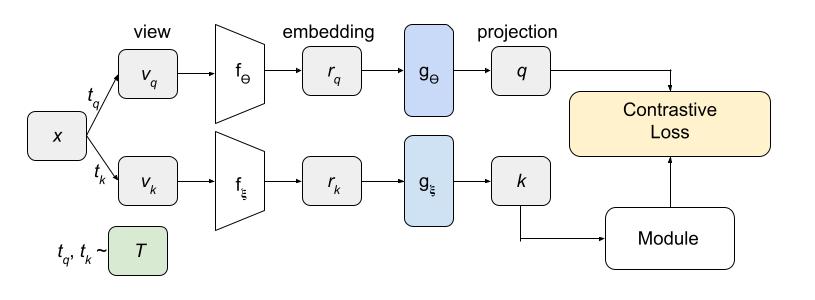

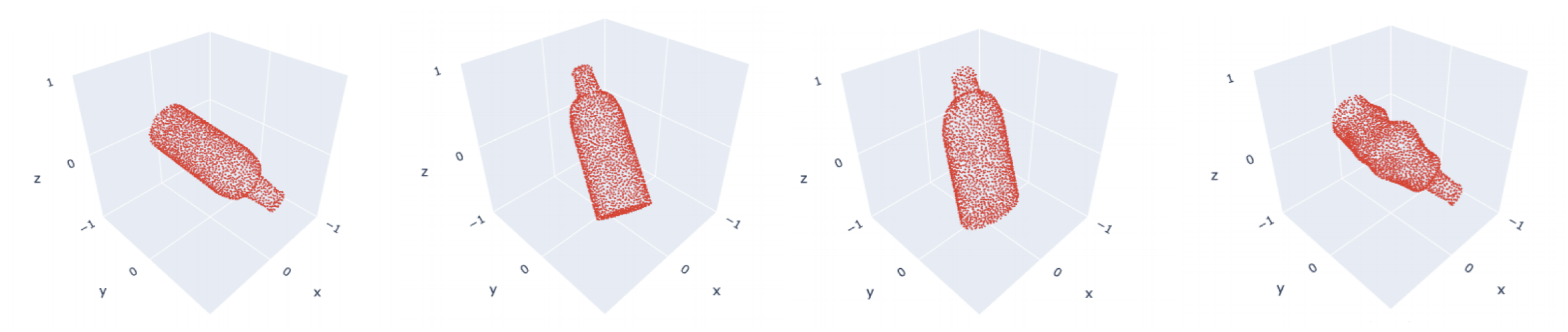

Abstract: Creating representations of shapes that are invariant to isometric or almost-isometric transformations has long been an area of interest in shape analysis, since enforcing invariance allows the learning of more effective and robust shape representations. Most existing invariant shape representations are hand-crafted, and previous work on learning shape representations does not focus on producing invariant representations. To solve the problem of learning invariant shape representations without supervision, we use contrastive learning, which produces discriminative representations by learning invariance to user-specified data augmentations. To produce representations that are specifically isometry- and almost-isometry-invariant, we propose new data augmentations that randomly sample these transformations. We show experimentally that our method outperforms previous unsupervised learning approaches in both effectiveness and robustness.
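The abstract names two ingredients: augmentations that randomly sample isometries, and a contrastive objective that makes embeddings invariant to them. The sketch below illustrates both under loudly stated assumptions. It treats shapes as (N, 3) point clouds, uses rigid Euclidean motions (rotation plus translation) as a simple special case of isometry rather than the intrinsic, almost-isometric deformations the paper targets, and uses a SimCLR-style NT-Xent loss as a generic contrastive objective. The names `random_rigid_isometry`, `nt_xent_loss` and `pairwise_distances` are hypothetical and are not the authors' code.

```python
import numpy as np


def random_rigid_isometry(points: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Apply a random rotation plus translation to an (N, 3) point cloud.

    Rigid motions preserve every pairwise Euclidean distance, so they are
    isometries. Illustrative stand-in only, not the paper's augmentation.
    """
    # QR of a Gaussian matrix yields a random orthogonal matrix q.
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    # Multiply column j by sign(r[j, j]) so q is Haar-distributed on O(3).
    q = q * np.sign(np.diag(r))
    # Flip one column if needed so q is a proper rotation (det = +1).
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    translation = rng.standard_normal(3)
    return points @ q.T + translation


def nt_xent_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """SimCLR-style NT-Xent loss; z1[i] and z2[i] embed two views of sample i."""
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)
    # Cosine similarity: L2-normalise each embedding, then take dot products.
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = z @ z.T / temperature
    # A view is never compared with itself.
    np.fill_diagonal(sim, -np.inf)
    # The positive partner of row i is row i + n, and vice versa.
    pos_idx = np.concatenate([np.arange(n, 2 * n), np.arange(n)])
    # Numerically stable log-sum-exp over each row (the softmax denominator).
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(2 * n), pos_idx] - log_denom
    return float(-log_prob_pos.mean())


def pairwise_distances(p: np.ndarray) -> np.ndarray:
    """All pairwise Euclidean distances of an (N, 3) point cloud."""
    return np.linalg.norm(p[:, None, :] - p[None, :, :], axis=-1)


rng = np.random.default_rng(0)
shape = rng.standard_normal((128, 3))
view = random_rigid_isometry(shape, rng)
# The augmentation moves the points but preserves all pairwise distances.
print(np.allclose(pairwise_distances(shape), pairwise_distances(view)))  # True

# Matched views (identical positives) give a loss close to 0;
# mismatched views give a loss close to 10 at this temperature.
z = np.eye(4)
print(nt_xent_loss(z, z, temperature=0.1))
print(nt_xent_loss(z, np.roll(z, 1, axis=0), temperature=0.1))
```

In a full pipeline, an encoder would map two independently augmented views of each shape to `z1` and `z2`; minimizing the loss then pushes the encoder to ignore whatever the augmentation changes, which is why the choice of augmentation determines which invariance is learned.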

