Capturing implicit hierarchical structure in 3D biomedical images with self-supervised hyperbolic representations

Published in Conference on Neural Information Processing Systems (NeurIPS), 2021

Recommended citation: Hsu, Joy, et al. "Capturing implicit hierarchical structure in 3D biomedical images with self-supervised hyperbolic representations." Advances in Neural Information Processing Systems 34 (2021): 5112-5123. https://proceedings.neurips.cc/paper_files/paper/2021/file/291d43c696d8c3704cdbe0a72ade5f6c-Paper.pdf

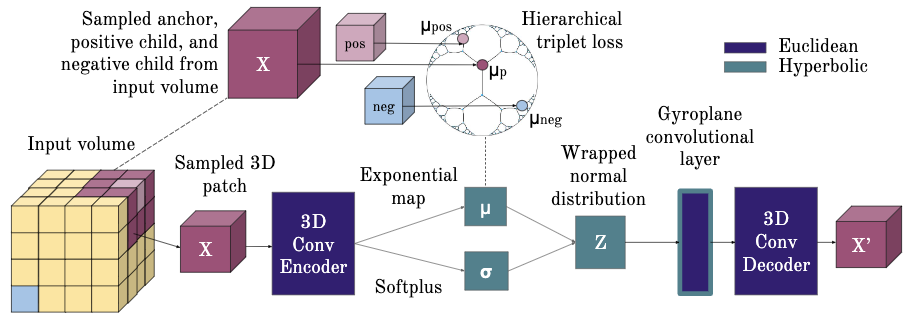

Abstract: We consider the task of representation learning for unsupervised segmentation of 3D voxel-grid biomedical images. We show that models that capture implicit hierarchical relationships between subvolumes are better suited for this task. To that end, we consider encoder-decoder architectures with a hyperbolic latent space, to explicitly capture hierarchical relationships present in subvolumes of the data. We propose utilizing a 3D hyperbolic variational autoencoder with a novel gyroplane convolutional layer to map from the embedding space back to 3D images. To capture these relationships, we introduce an essential self-supervised loss—in addition to the standard VAE loss—which infers approximate hierarchies and encourages implicitly related subvolumes to be mapped closer in the embedding space. We present experiments on synthetic datasets along with a dataset from the medical domain to validate our hypothesis.

A previous version of this paper was published as “Learning Hyperbolic Representations for Unsupervised 3D Segmentation”.